Getting started with an Eleventy blog on AWS

Tags: AWS, S3, Cloudfront, Bitbucket, Eleventy

I recently decided to start a blog to write about the different projects I work on in my spare time. Since I just got the blog up and running, I thought a good place to start would be to write about what technology I chose to use and how I set everything up.

Eleventy as the static site generator

I wanted to create a simple (and cheap) site that would be easy to publish to, either from the command line locally or from a CI/CD pipeline. This led me to look into different static site generators and I ended up deciding on Eleventy. I didn't do any detailed comparison of different generators, but simply went with Eleventy since it looked easy to get started with and seems to be well tried and tested across different use cases.

To start with I simply followed the getting started guide on the 11ty website and ended up using the official eleventy base blog starter project to get up and running quickly. All I had to do was download the starter project and run npx @11ty/eleventy to build the static site. Running npx @11ty/eleventy --serve instead builds the site and serves it locally for testing.

Setting up the infrastructure on AWS

The next step was to make the site public on the internet. I bought the domain on AWS and decided to serve the site from an S3 bucket with CloudFront as a CDN. I prefer keeping track of all infrastructure as code, so I created a CloudFormation template to provision the necessary infrastructure for the site.

The first step was deciding on the parameters to define for the template, so that it can be reused to provision different static sites on AWS. S3 bucket names have to be globally unique, and the DNS name of the site has to be supplied to the CloudFront distribution resource, so both were added as parameters. I wanted to use HTTPS, so I needed to provision an SSL certificate as well. Although this can be done in CloudFormation, it is necessary to manually add a DNS record for the domain to verify ownership, unless the domain is hosted on Route 53 in the same AWS account where the certificate is provisioned. Although I have everything running in the same AWS account, I decided to create the certificate separately and supply its ARN as a parameter, to better support setups where that is not the case. The initial part of the template looks as follows:

    AWSTemplateFormatVersion: '2010-09-09'
    Description: Infrastructure for static website with Cloudfront distribution passing traffic to S3 bucket
    Parameters:
      S3BucketName:
        Type: String
      DnsName:
        Type: String
        Description: The domain of the static website, e.g. blog.vkjelseth.com
      SSLCertificateArn:
        Type: String
        Description: Arn of certificate for DnsName. Must be located in us-east-1 to work, since this is required by Cloudfront.
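Since the certificate has to live in us-east-1, it can be handy to check the ARN before passing it to the stack. The following is only a minimal sketch: the function names arn_region and is_cloudfront_certificate are made up for illustration, and the account id and certificate id are placeholders.

```shell
# Print the region field of an ARN (arn:partition:service:region:account-id:resource).
arn_region() {
  printf '%s\n' "$1" | cut -d: -f4
}

# Succeed only if the ARN points at an ACM certificate in us-east-1.
is_cloudfront_certificate() {
  case "$1" in
    arn:aws:acm:us-east-1:*:certificate/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Placeholder ARN, not a real certificate.
cert_arn='arn:aws:acm:us-east-1:123456789012:certificate/abcd1234-ab12-cd34-ef56-abcdef123456'

if is_cloudfront_certificate "$cert_arn"; then
  echo "certificate region OK: $(arn_region "$cert_arn")"
else
  echo "certificate must be in us-east-1, found: $(arn_region "$cert_arn")" >&2
fi
```

The check is purely textual, so it only catches a wrong region in the ARN, not a certificate that is missing or not yet validated.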

The next step was defining the resources. The S3 bucket definition is pretty straightforward: the bucket is configured for static website hosting and has a bucket policy that allows public read access to its objects. The CloudFront distribution uses the website endpoint of the S3 bucket as its origin, the certificate passed as a parameter to the stack, and a managed caching policy that is supplied by AWS.

    Resources:
      # Logical IDs must be alphanumeric, and this name has to match the
      # !Ref S3Bucket / !GetAtt S3Bucket references below.
      S3Bucket:
        Type: 'AWS::S3::Bucket'
        Properties:
          BucketName: !Ref S3BucketName
          AccessControl: PublicRead
          WebsiteConfiguration:
            IndexDocument: index.html
            ErrorDocument: error.html
          VersioningConfiguration:
            Status: Enabled
      S3BucketPublicReadPolicy:
        Type: AWS::S3::BucketPolicy
        Properties:
          Bucket: !Ref S3Bucket
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Action:
                  - 's3:GetObject'
                Effect: Allow
                Resource: !Join [ '', [ 'arn:aws:s3:::', !Ref S3Bucket, '/*' ] ]
                Principal: '*'
                Sid: 'PublicReadGetObject'
      CloudFrontDistribution:
        Type: AWS::CloudFront::Distribution
        Properties:
          DistributionConfig:
            Origins:
              - DomainName: !Select [2, !Split ["/", !GetAtt S3Bucket.WebsiteURL]] # Strip http:// from website URL
                Id: 'S3'
                CustomOriginConfig:
                  OriginProtocolPolicy: 'http-only'
            Enabled: true
            DefaultCacheBehavior:
              TargetOriginId: 'S3'
              AllowedMethods:
                - 'GET'
                - 'HEAD'
              Compress: true
              CachePolicyId: '658327ea-f89d-4fab-a63d-7e88639e58f6' # ID of managed policy CachingOptimized
              ViewerProtocolPolicy: 'redirect-to-https'
            Aliases:
              - !Ref DnsName
            ViewerCertificate:
              AcmCertificateArn: !Ref SSLCertificateArn
              MinimumProtocolVersion: 'TLSv1.2_2021'
              SslSupportMethod: 'sni-only'
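The !Select/!Split expression used for the origin's DomainName can be a bit cryptic. As a rough illustration of what it evaluates to, the same split can be mimicked in the shell. The helper select_split is hypothetical and the website URL is an example value; the real one depends on the bucket name and region.

```shell
# Mimic !Select [index, !Split [delimiter, string]] for a single-character delimiter.
# CloudFormation counts from 0 and cut counts from 1, hence the +1.
select_split() {
  printf '%s\n' "$3" | cut -d "$2" -f "$(( $1 + 1 ))"
}

# Example WebsiteURL value (assumed, not taken from a real stack).
website_url='http://my-static-blog.s3-website-eu-west-1.amazonaws.com'

select_split 0 / "$website_url"   # the scheme part, "http:"
select_split 1 / "$website_url"   # the empty string between the two slashes
select_split 2 / "$website_url"   # the bare host name that CloudFront needs
```

Index 2 is the first element after "http:" and the empty string between the two slashes, which is why the template selects it.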

The template could then be deployed locally using the AWS CLI. For example:

    # S3 bucket names must be lowercase
    aws cloudformation deploy --template-file static-website.yaml --stack-name my-static-blog \
        --parameter-overrides S3BucketName=my-static-blog DnsName=blog.mywebsite.com \
        SSLCertificateArn=arn:aws:acm:us-east-1:<account-id>:certificate/<certificate-guid>

AWS has tutorials and documentation that provide more details on how this can be set up.

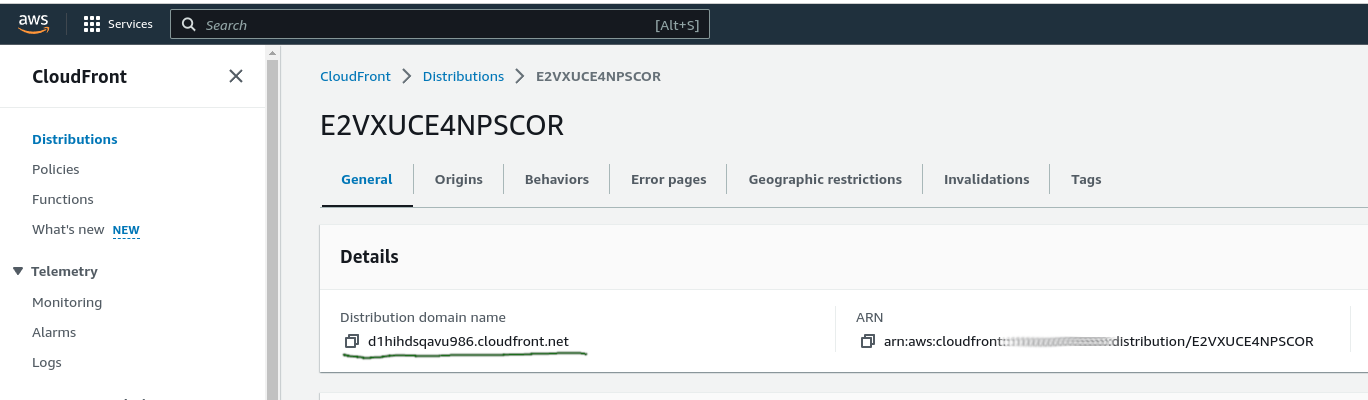

Once the infrastructure was up and running, the final manual step was adding a CNAME record that points the blog's domain to the domain name of the CloudFront distribution. The distribution's domain name can be found in the AWS console for the account it is deployed to.
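If the domain's DNS is hosted in Route 53, the CNAME record could also be created from the command line instead of the console. The sketch below only builds the change batch document; the function name cname_change_batch, the TTL of 300 seconds and both domain names are assumptions for illustration.

```shell
# Print a Route 53 change batch that creates or updates a CNAME record.
# $1 = record name (the site's domain), $2 = target (the distribution's domain name)
cname_change_batch() {
  cat <<EOF
{
  "Changes": [{
    "Action": "UPSERT",
    "ResourceRecordSet": {
      "Name": "$1",
      "Type": "CNAME",
      "TTL": 300,
      "ResourceRecords": [{ "Value": "$2" }]
    }
  }]
}
EOF
}

# Placeholder values, not taken from the actual setup.
cname_change_batch blog.mywebsite.com d111111abcdef8.cloudfront.net

# To apply it (needs valid credentials and the hosted zone id of the domain):
# aws route53 change-resource-record-sets --hosted-zone-id <hosted-zone-id> \
#   --change-batch "$(cname_change_batch blog.mywebsite.com d111111abcdef8.cloudfront.net)"
```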

Building and deploying the blog to AWS

With the infrastructure set up, the next step was creating a way to deploy the blog to AWS. All that is necessary is to build the static site and copy the generated content to the S3 bucket. Eleventy writes its output to the folder _site by default, which I have not changed, so running the build command and syncing this folder to S3 was all that was needed. I also added a command to remove any old files before building the site, and npm install to install dependencies, so that I can clone the git repo on a machine where it has not been set up before and run the deploy script right away. I created the following deploy script to achieve this.

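    #!/usr/bin/env bash
    # Script to deploy website to S3 bucket.
    # Assumes there are valid AWS credentials present in the environment

    # Stop at the first failing command so a broken build is never synced
    set -euo pipefail

    bucket_name='my-static-blog'

    rm -rf _site        # remove output from earlier builds
    npm install         # install Eleventy and other dependencies
    npx @11ty/eleventy  # build the site into _site

    # Upload new and changed files, and delete files that no longer exist locally
    aws s3 sync --delete _site "s3://${bucket_name}/"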

Setting up a CI/CD pipeline in Bitbucket

Although I will mostly be deploying changes locally for a personal project like this, I prefer having this set up in a separate pipeline as well. I do not have any tests to run against new blog deployments, so this will really just be a deployment pipeline for pushing changes from Bitbucket, where I am hosting the code. It might seem a bit excessive, but I will be doing it for both the blog and the infrastructure repo, and it allows me to easily redeploy everything from anywhere without having access to my local machine.

To start with I needed to allow Bitbucket access to my AWS account. A common way of doing this is to create a user with the necessary permissions and generate security credentials that can be used from Bitbucket to deploy to AWS. Since the pipeline needs permission to provision every resource you want to deploy, this creates long-lived credentials that could do a lot of damage if they were to fall into the hands of an attacker. To avoid, or at least reduce, this risk it is recommended to use short-lived credentials.

For personal users it is recommended to enforce MFA, and on my personal machine I only store credentials that allow me to generate short-lived credentials. This can be done in a number of ways, but I use aws-mfa, which makes it easy to generate credentials while maintaining security. Having to manually enter an MFA code every time you run a pipeline would defeat some of the purpose of having a pipeline in the first place, but it is possible to use OpenID Connect by configuring Bitbucket Pipelines as a web identity provider on AWS. The linked article provides more details on how this is set up, but basically you configure a role that Bitbucket is allowed to assume, and when a pipeline runs Bitbucket issues an OIDC token that is exchanged for short-lived credentials for that role. This can be set up for use with GitHub Actions as well.
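For a step that calls the AWS CLI directly, the exchange can be left to the CLI itself by pointing it at the token. This is only a sketch under a few assumptions: the step has oidc: true so that Bitbucket sets BITBUCKET_STEP_OIDC_TOKEN, the function name configure_oidc_credentials and the default token path are made up, and the role ARN is whatever role was configured for Bitbucket.

```shell
# Point the AWS CLI and SDKs at the OIDC token that Bitbucket provides to the step.
# $1 = ARN of the role Bitbucket is allowed to assume
# $2 = file to write the token to (optional, hypothetical default)
configure_oidc_credentials() {
  if [ -z "${BITBUCKET_STEP_OIDC_TOKEN:-}" ]; then
    echo "BITBUCKET_STEP_OIDC_TOKEN is not set; is oidc enabled for this step?" >&2
    return 1
  fi
  token_file="${2:-$PWD/web-identity-token}"
  printf '%s' "$BITBUCKET_STEP_OIDC_TOKEN" > "$token_file"
  # Standard AWS environment variables for web identity federation
  export AWS_ROLE_ARN="$1"
  export AWS_WEB_IDENTITY_TOKEN_FILE="$token_file"
}

# In a pipeline step this could be used as:
# configure_oidc_credentials "$AWS_OIDC_ROLE"
# aws sts get-caller-identity   # should report the assumed role
```

With these two variables set, the AWS CLI requests temporary credentials for the role on its own, so no access keys need to be stored in Bitbucket.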

With this set up I was ready to write and test the deployment pipelines. I started by setting this up for the infrastructure repo. I did this by defining a step that can be used by different pipelines for deploying stacks to AWS. This was done using a Bitbucket pipe that handles the generation of the short-lived credentials for access to AWS and basically performs the same deployment command that I wrote out above. The stack parameters and the OIDC role are passed to the pipe through repository variables that I defined for the Bitbucket repo. I then created a custom pipeline that uses this step and can be manually triggered for any branch in the repository. I don't need automatic deployments for my personal projects, at least not yet, but this can easily be extended to automatically trigger the deployment step after every merge to the master branch.

    ---
    definitions:
      steps:
        - step: &deploy-to-aws
            name: Deploy stacks to environment
            oidc: true
            script:
              - pipe: atlassian/aws-cloudformation-deploy:0.15.0
                variables:
                  STACK_NAME: 'vkjelseth-blog-infrastructure'
                  TEMPLATE: 'aws/static-website.yml'
                  AWS_OIDC_ROLE_ARN: $AWS_OIDC_ROLE
                  WAIT: 'true'
                  STACK_PARAMETERS: >
                    [{
                      "ParameterKey": "S3BucketName",
                      "ParameterValue": "$PERSONAL_BLOG_S3_BUCKET_NAME"
                    },
                    {
                      "ParameterKey": "DnsName",
                      "ParameterValue": "$PERSONAL_BLOG_DNS"
                    },
                    {
                      "ParameterKey": "SSLCertificateArn",
                      "ParameterValue": "$PERSONAL_BLOG_SSL_CERTIFICATE_ARN"
                    }]
    pipelines:
      custom:
        deploy-to-aws:
          - step: *deploy-to-aws
            name: Deploy stacks to AWS environment
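Note that the pipe takes the stack parameters as a JSON array of ParameterKey/ParameterValue objects, while the aws cloudformation deploy command takes Key=Value pairs. A small, purely illustrative helper (to_stack_parameters is a made-up name) shows how the two forms relate. It does no JSON escaping, so it assumes values without quotes or backslashes.

```shell
# Convert Key=Value arguments into the JSON array form used for STACK_PARAMETERS.
to_stack_parameters() {
  sep=''
  printf '['
  for pair in "$@"; do
    # ${pair%%=*} is the text before the first '=', ${pair#*=} the text after it
    printf '%s{"ParameterKey": "%s", "ParameterValue": "%s"}' \
      "$sep" "${pair%%=*}" "${pair#*=}"
    sep=', '
  done
  printf ']\n'
}

# Example values, matching the placeholders used for the CLI command earlier.
to_stack_parameters S3BucketName=my-static-blog DnsName=blog.mywebsite.com
```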

The final step was adding a pipeline for the blog repository. For this I simply created the deployment step directly in the custom pipeline. The site is built by installing dependencies and running npx @11ty/eleventy, while the deployment is done by using a Bitbucket pipe for deploying to AWS.

    pipelines:
      custom:
        deploy-blog-to-s3-bucket:
          - step:
              name: Build and deploy website to S3 bucket on AWS
              image: node:18.12.1-alpine
              oidc: true
              script:
                - npm install
                - npx @11ty/eleventy
                - pipe: atlassian/aws-s3-deploy:1.1.0
                  variables:
                    AWS_OIDC_ROLE_ARN: $AWS_OIDC_ROLE
                    S3_BUCKET: $PERSONAL_BLOG_S3_BUCKET_NAME
                    LOCAL_PATH: '_site'
                    WAIT: 'true'