Improved static site deployment with CloudFront cache invalidation

I recently created this site using Eleventy as the static site generator, with AWS S3 for hosting and CloudFront as the CDN. For more information on how I set everything up, see my previous blog post. I have used S3 and CloudFront to host static content before, but I had never deployed changes to existing content that I expected to be available immediately. Once I started this blog, however, I noticed that although new changes reached the S3 bucket right away, it took longer for them to show up through CloudFront.

This happens because CloudFront caches content according to a caching policy. When I set this up I used the AWS managed caching policy CachingOptimized, which by default uses a time-to-live (TTL) of 24 hours for cached content. The purpose is to serve the site faster without fetching content from S3 on every request. Although this works as intended, I still want newly deployed blog content to be immediately available through CloudFront. This can be achieved by invalidating the cache, which forces CloudFront to fetch from S3 the next time a request is made.
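One way to see this caching in action is to look at the headers CloudFront adds to a response: `X-Cache` reports whether the edge cache was hit, and `Age` reports how many seconds the object has been cached. The following is a minimal sketch that assumes `curl` is available; `summarize_cache_headers` is a hypothetical helper name and the URL in the usage comment is a placeholder.

```shell
#!/usr/bin/env sh
# Sketch: summarize CloudFront cache headers from an HTTP response.
# summarize_cache_headers is a hypothetical helper; it reads raw response
# headers on stdin and prints the X-Cache and Age headers if present.
summarize_cache_headers() {
  # Strip carriage returns (HTTP header lines end in CRLF), then match
  # the two header names case-insensitively.
  tr -d '\r' | grep -i -E '^(x-cache|age):'
}

# Usage (placeholder URL, replace with your own site):
#   curl -sI https://www.example.com/ | summarize_cache_headers
```

A `Hit from cloudfront` value together with a non-zero `Age` suggests the response was served from the edge cache rather than fetched from S3.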

Cache invalidation in deployment script

I started by adding cache invalidation to my existing blog deployment script. The first step was figuring out how to invalidate the cache using the AWS CLI. This can be done with the following command:

aws cloudfront create-invalidation --distribution-id <cloudfront-distribution-id> --paths "/*"

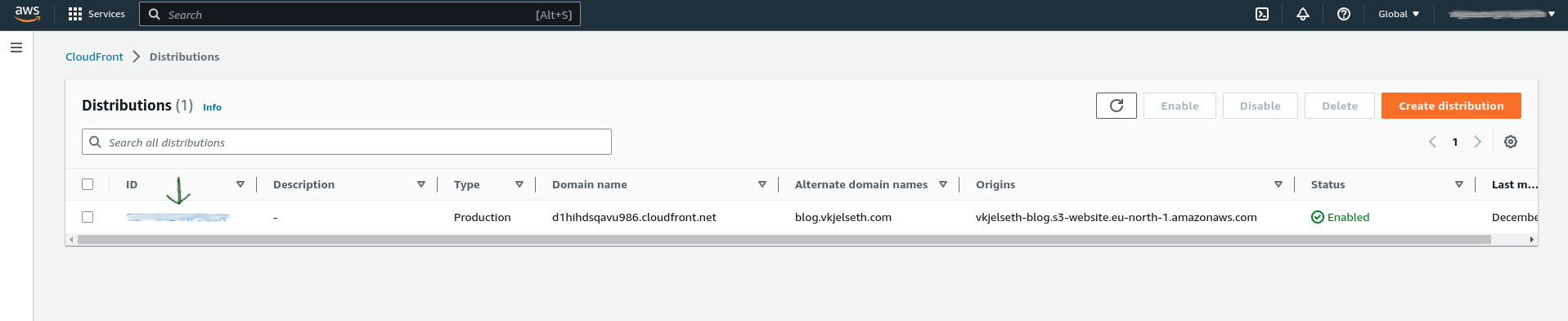

This invalidates the cache for all paths for the cloudfront distribution with the given ID. The Cloudfront distribution ID can be looked up on the AWS web console:
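The ID can also be looked up from the command line. The sketch below assumes the AWS CLI is installed and that the active credentials are allowed to call `cloudfront:ListDistributions`; `list_distribution_ids` is a hypothetical helper name, not something from my deployment script.

```shell
#!/usr/bin/env sh
# Sketch: list CloudFront distributions with their IDs and domain names.
# list_distribution_ids is a hypothetical helper name. It assumes valid AWS
# credentials are present in the environment.
list_distribution_ids() {
  # The JMESPath query keeps three fields per distribution; text output
  # prints them as one tab-separated row per distribution.
  aws cloudfront list-distributions \
    --query 'DistributionList.Items[].[Id, DomainName, Comment]' \
    --output text
}

# Usage:
#   list_distribution_ids
```

Each row starts with the distribution ID, which is the value to pass to `--distribution-id`.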

With this sorted out I simply added the CLI command as the last command of my deployment script.

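#!/usr/bin/env bash
# Script to deploy website to S3 bucket.
# Assumes there are valid AWS credentials present in the environment.

# Stop at the first failing command so a broken build or a failed sync
# never triggers an invalidation.
set -euo pipefail

bucket_name='your-bucket-name'
cloudfront_distribution_id='your-cloudfront-distribution-id'

# Build the site from scratch.
rm -rf _site
npm install
npx @11ty/eleventy

# Upload the build and remove files that no longer exist locally.
aws s3 sync --delete _site "s3://${bucket_name}/"

# Invalidate every cached path so CloudFront fetches the new content from S3.
aws cloudfront create-invalidation --distribution-id "$cloudfront_distribution_id" --paths "/*"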

Now the CloudFront cache is invalidated after every deploy with this script, which makes the changes available as soon as the invalidation completes, like I wanted.
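An invalidation is processed asynchronously, so the command returns before CloudFront has finished. If the script should only exit once the new content is actually being served, the CLI's `wait invalidation-completed` waiter can be used. This is a sketch rather than part of my script; `invalidate_and_wait` is a hypothetical helper name.

```shell
#!/usr/bin/env sh
# Sketch: create an invalidation and block until CloudFront reports it done.
# invalidate_and_wait is a hypothetical helper; the distribution ID is
# passed as the first argument.
invalidate_and_wait() {
  distribution_id=$1
  # Capture the ID of the new invalidation so it can be waited on.
  invalidation_id=$(aws cloudfront create-invalidation \
    --distribution-id "$distribution_id" \
    --paths "/*" \
    --query 'Invalidation.Id' \
    --output text) || return 1
  # Polls until the invalidation is completed or the waiter gives up.
  aws cloudfront wait invalidation-completed \
    --distribution-id "$distribution_id" \
    --id "$invalidation_id"
}

# Usage:
#   invalidate_and_wait 'your-cloudfront-distribution-id'
```

The trade-off is a slower deploy, since the script now blocks until the waiter returns.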

Bitbucket pipeline

In addition to adding the cache invalidation to the deployment script, I wanted to add it to my Bitbucket pipeline as well. In a previous blog post I described how OpenID Connect is used to authenticate against AWS from the pipeline. For deploying the site I used a Bitbucket pipe, which extracts and uses the generated web identity token when syncing with S3.

In this case I used the CLI command for invalidating the cache and not a Bitbucket pipe. That meant that I needed to create a file that includes the web identity token that Bitbucket has generated and use an image that has the AWS CLI installed. See the Bitbucket documentation that describes this in further detail.

It is also necessary to have the following environment variables defined:

- AWS_REGION
  - Required by the AWS CLI to specify the region the command is run against. I already have this defined as a workspace variable.
- AWS_ROLE_ARN
  - Required by AWS to know which role is assumed. I already have a workspace variable that defines this for Bitbucket in the variable AWS_OIDC_ROLE. The variable AWS_ROLE_ARN has to be created inside the pipeline with the value from AWS_OIDC_ROLE.
- AWS_WEB_IDENTITY_TOKEN_FILE
  - Required by the AWS CLI to know where the file containing the web identity token is located. This file has to be created and populated with the token inside the pipeline.
- CLOUDFRONT_DISTRIBUTION_ID
  - The ID of the CloudFront distribution used for the blog. It does not strictly have to be an environment variable, but the value is required by the CLI command that invalidates the cache. I will specify this as a repository variable for the blog repo.
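The variable setup described in the list above can be sketched as a small shell function. This is an illustration under assumptions, not the exact code in my pipeline: `prepare_oidc_env` is a hypothetical helper name, and the up-front check and the restrictive file permissions are additions of the sketch.

```shell
#!/usr/bin/env sh
# Sketch: prepare the environment the AWS CLI needs for web identity auth.
# prepare_oidc_env is a hypothetical helper. AWS_OIDC_ROLE and
# CLOUDFRONT_DISTRIBUTION_ID are variables defined in Bitbucket;
# BITBUCKET_STEP_OIDC_TOKEN is provided by Bitbucket when oidc is enabled.
prepare_oidc_env() {
  # Fail early with a clear message if any input variable is missing or empty.
  for name in AWS_REGION AWS_OIDC_ROLE BITBUCKET_STEP_OIDC_TOKEN CLOUDFRONT_DISTRIBUTION_ID; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing required variable: $name" >&2
      return 1
    fi
  done

  # Write the token to a file that only the current user can read.
  token_file="$(pwd)/web-identity-token"
  (umask 077 && printf '%s' "$BITBUCKET_STEP_OIDC_TOKEN" > "$token_file") || return 1

  # These two variables tell the AWS CLI to assume the role via the token.
  export AWS_ROLE_ARN="$AWS_OIDC_ROLE"
  export AWS_WEB_IDENTITY_TOKEN_FILE="$token_file"
}

# Usage inside a pipeline step:
#   prepare_oidc_env && aws cloudfront create-invalidation \
#     --distribution-id "$CLOUDFRONT_DISTRIBUTION_ID" --paths "/*"
```

Checking the variables first makes a misconfigured repository variable fail with a readable message instead of an authentication error from the CLI.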

With the repository variables in place I added a second step to my Bitbucket pipeline to invalidate the cache after deploying the site to S3. The complete pipeline is shown below and I am now able to deploy the site and invalidate the cache both locally and through my Bitbucket pipeline.

pipelines:
  custom:
    deploy-blog-to-s3-bucket:
      - step:
          name: Build and deploy website to S3 bucket on AWS
          image: node:18.12.1-alpine
          oidc: true
          script:
            - npm install
            - npx @11ty/eleventy
            - pipe: atlassian/aws-s3-deploy:1.1.0
              variables:
                AWS_OIDC_ROLE_ARN: $AWS_OIDC_ROLE
                S3_BUCKET: $PERSONAL_BLOG_S3_BUCKET_NAME
                LOCAL_PATH: '_site'
                WAIT: 'true'
      - step:
          name: Invalidate Cloudfront cache
          image: amazon/aws-cli
          oidc: true
          script:
            - export AWS_ROLE_ARN=$AWS_OIDC_ROLE
            - export AWS_WEB_IDENTITY_TOKEN_FILE=$(pwd)/web-identity-token
            - echo $BITBUCKET_STEP_OIDC_TOKEN > $(pwd)/web-identity-token
            - aws cloudfront create-invalidation --distribution-id $CLOUDFRONT_DISTRIBUTION_ID --paths "/*"
